Greetings, friends! In this third White Paper, Realist(ic) Evaluation Tools for OST Programs: The Quality-Outcomes Design and Methods (Q-ODM) Toolbox, we move from the neuroperson framework for socio-emotional skills to evaluation design and impact evidence. How we evaluate out-of-school time (OST) programs and assess their impact on student skill growth is a critical issue, especially given the ambiguity about impacts from gold-standard evaluations of publicly funded afterschool programs. Are programs producing weak or no effects? Or are gold-standard designs missing something?

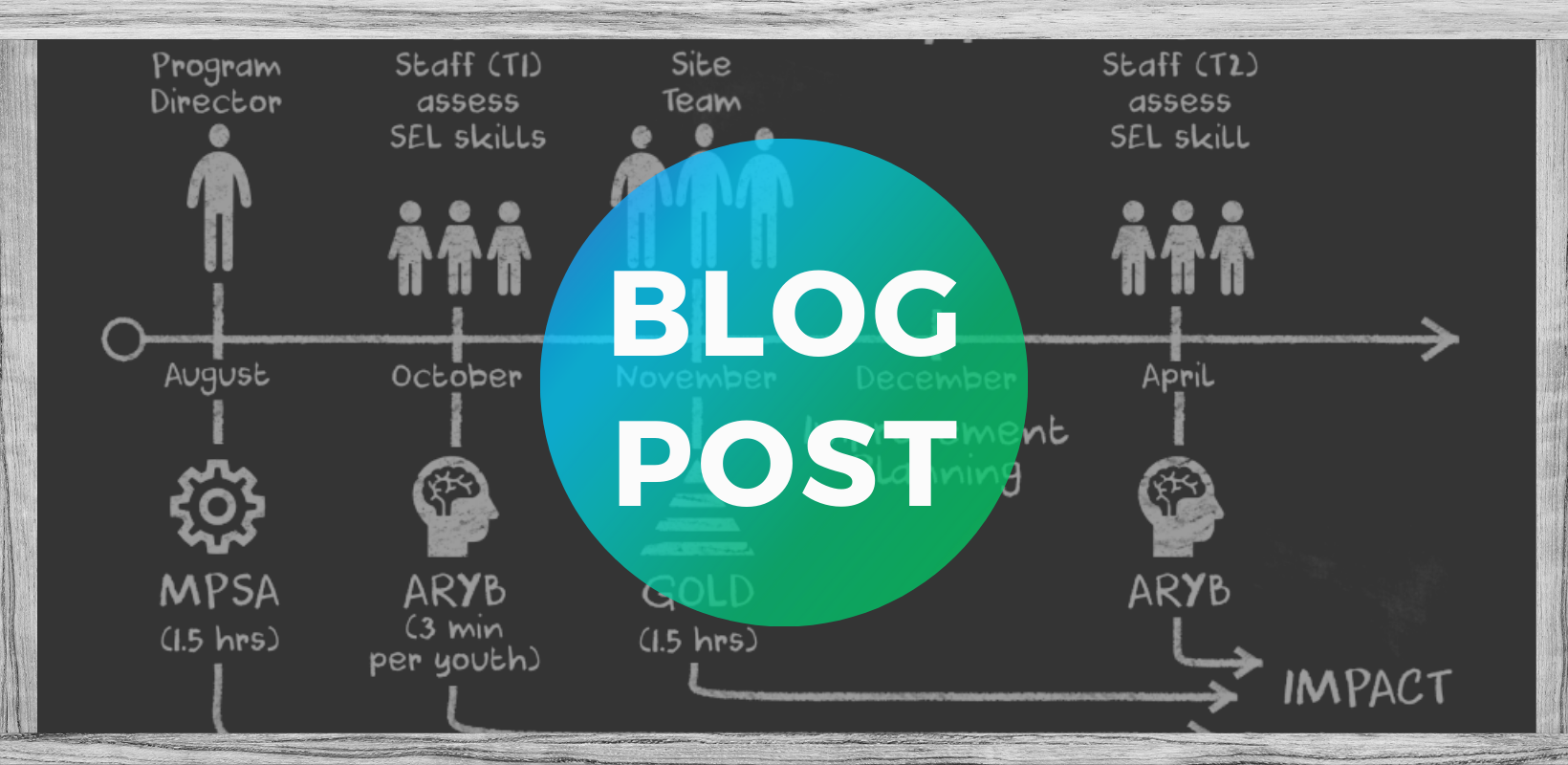

We offer a sequence of evaluation questions that charts the course to realistic evidence about quality and outcomes (i.e., cause and effect, or “how” and “how much”) and that is useful to managers, teachers, coaches, and evaluators. We’ve learned these questions over the past two decades by asking tens of thousands of afterschool, early childhood, and school-day teachers how data and results about their own work serve them best.

Getting the evaluation questions right calls for measurement and analytics tools that:

…reflect the assumption that children have mental skills that are causes of their behavior…. These mental skills are conceived of as several different aspects of mental functioning (i.e., schemas, beliefs, & awareness) that exist within every biologically-intact person, enable behavioral skills, and can be assessed, more or less accurately, using properly-aligned measures. When the parts and patterns of skill are reflected in theory and measures, the accuracy and meaningfulness of data about program quality and SEL skill – and all subsequent manipulations and uses of the data – are dramatically improved.

Our thinking is deeply anchored in pattern- and person-centered science. Check out a related blog here: Why are Q-ODM’s Pattern-Centered Methods (PCM) More Realistic and Useful?

Finally, we provide data visualization examples that complete an unbroken chain of encoded meaning, from the observation of students’ socio-emotional skills in an afterschool classroom, to the decoding of the data visualization by an end-user. We’re pleased to share these insights. Cheers!

P.S. For CEOs who need impact evidence: Why are gold-standard designs not as cost-effective as we might think? Elsewhere, we have argued that gold-standard designs for afterschool programs are misspecified models because they lack key moderator and mediator variables (e.g., instructional quality and socio-emotional skills). For example, the large impacts (often equity effects, as predicted by the neuroperson framework) that we typically find for students who start programs with lower socio-emotional skills but who receive high-quality instruction cannot be detected using most gold-standard designs. As a result, it is difficult (or impossible) to analogize from the results of gold-standard designs to the real actions taken by real people; thus, those designs are not very cost-effective for improvement or for telling compelling stories about impact. Check out a related blog here: How the Q-ODM impact model is a more cost-effective form of the quasi-experimental design (QED).
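
For readers who like to see the arithmetic, here is a minimal sketch of why an average-only comparison can hide a large subgroup effect. Everything in it is an assumption made for illustration: the gain values are invented, the four cells are assumed to be equal in size, and the names `program_gain`, `average_effect`, and `subgroup_effects` are hypothetical. It is not the Q-ODM impact model and does not use data from any study.

```python
from statistics import mean

# Invented skill gains in arbitrary units. These are NOT study results.
CONTROL_GAIN = 1.0  # assumed gain for comparison students

# Assumed gains for program students, keyed by
# (baseline socio-emotional skill, instructional quality).
program_gain = {
    ("low", "high"): 3.0,   # the subgroup where the large impact is assumed to occur
    ("low", "low"): 1.0,
    ("high", "high"): 1.0,
    ("high", "low"): 1.0,
}


def average_effect(gains, control_gain):
    """Program-vs-control difference when cells are pooled (equal cell sizes assumed)."""
    return mean(gains.values()) - control_gain


def subgroup_effects(gains, control_gain):
    """Program-vs-control difference computed separately for each cell."""
    return {cell: gain - control_gain for cell, gain in gains.items()}


overall = average_effect(program_gain, CONTROL_GAIN)
by_cell = subgroup_effects(program_gain, CONTROL_GAIN)

print("Pooled effect:", overall)
for cell, effect in by_cell.items():
    print(cell, effect)
```

Under these made-up numbers, the pooled effect is a quarter of the effect in the low-baseline, high-quality cell, because three of the four cells contribute nothing. A design that never measures baseline skill or instructional quality only ever sees the pooled number.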